Use this to select which output is generated when fitting a neural network, and to set the options that control the analysis.

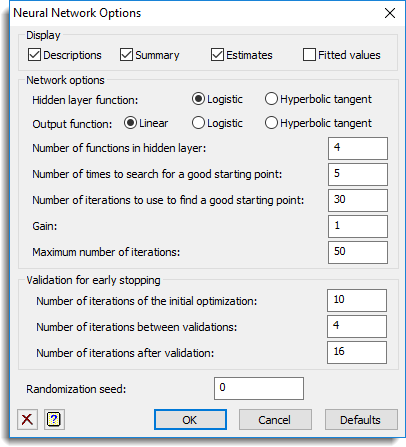

Display

Specifies which items of output are to be displayed in the Output window.

| Description | A description of the network. |

| Summary | Summary of the network fitted. |

| Estimates | Estimates from the network fitted. |

| Fitted values | The fitted values from the network fitted. |

Hidden layer function

The activation function to use in the hidden layer:

| Logistic | Use the logistic function 1/(1 + exp(-gx)) where g is the Gain |

| Hyperbolic tangent | Use the hyperbolic tangent function tanh(gx) where g is the Gain |

Output function

The activation function to use in the output layer:

| Linear | Use the linear function z |

| Logistic | Use the logistic function 1/(1 + exp(-gz)) where g is the Gain |

| Hyperbolic tangent | Use the hyperbolic tangent function tanh(gz) where g is the Gain |
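The activation functions listed for the hidden and output layers can be sketched in a few lines of Python. This is only an illustration of the formulas given above, not the software's own code; the function names are hypothetical, and the overflow-safe form of the logistic function is an implementation choice made for this sketch.

```python
import math


def logistic(x, gain=1.0):
    """Logistic activation 1 / (1 + exp(-g*x)), written to avoid overflow."""
    t = gain * x
    if t >= 0:
        return 1.0 / (1.0 + math.exp(-t))
    # For negative t, use the equivalent form exp(t) / (1 + exp(t)).
    e = math.exp(t)
    return e / (1.0 + e)


def hyperbolic_tangent(x, gain=1.0):
    """Hyperbolic tangent activation tanh(g*x)."""
    return math.tanh(gain * x)


def linear(z):
    """Linear (identity) activation; the gain is not used here."""
    return z


# A larger gain makes both curves steeper around zero.
for g in (0.5, 1.0, 2.0):
    print(g, logistic(1.0, g), hyperbolic_tangent(1.0, g))
```

The two nonlinear choices are closely related: tanh(gx) equals 2 × logistic(2gx) − 1, so the main practical difference is the output range, (0, 1) for the logistic function and (−1, 1) for the hyperbolic tangent.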

Number of functions in hidden layer

This gives the number of nodes in the hidden layer. Each node is connected to the nodes in the output layer, with one output node for each variable selected. Increasing the number of nodes will increase the flexibility of the model, but also make it more complicated and prone to over-fitting. This value must be a positive integer.
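To see why more hidden nodes make the model more flexible but also more complicated, the following minimal sketch computes the output of a network with one hidden layer and counts its weights. It assumes a bias term on every node, a hyperbolic tangent hidden layer and a linear output layer; these choices, and the names `forward` and `count_weights`, are assumptions made for illustration only.

```python
import math


def forward(inputs, hidden_weights, output_weights, gain=1.0):
    """Forward pass through a network with a single hidden layer.

    hidden_weights: one row per hidden node, [bias, w_1, ..., w_p]
    output_weights: one row per output node, [bias, v_1, ..., v_h]
    """
    hidden = [
        math.tanh(gain * (row[0] + sum(w * x for w, x in zip(row[1:], inputs))))
        for row in hidden_weights
    ]
    # Linear output layer: a weighted sum of the hidden-node values.
    return [
        row[0] + sum(v * h for v, h in zip(row[1:], hidden))
        for row in output_weights
    ]


def count_weights(n_inputs, n_hidden, n_outputs):
    """Number of free parameters, including one bias per node."""
    return n_hidden * (n_inputs + 1) + n_outputs * (n_hidden + 1)


# The parameter count grows linearly with the number of hidden nodes.
for n_hidden in (1, 2, 4, 8):
    print(n_hidden, count_weights(n_inputs=3, n_hidden=n_hidden, n_outputs=1))
```

Under these assumptions, each extra hidden node adds one weight per input, one bias, and one weight per output node, which is the added flexibility (and over-fitting risk) described above.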

Number of times to search for a good starting point

This is the number of random starting points selected for the initial weights in the model. Increasing this value will tend to improve the starting point used in fitting the model, but will increase the time taken to fit the model. This value must be a positive integer.

Number of iterations to use to find a good starting point

For each random starting point for the weights, this number of iterations of the conjugate-gradient function is used to improve the weights. The set of weights that is best after the final iteration, taken over all the random starting points, is then used as the starting point in the main optimization loop. Increasing this value will tend to improve the starting point used in fitting the model, but will increase the time taken to fit the model. This value must be a positive integer.
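The interaction between the number of starting points and the number of iterations per starting point can be sketched as a simple multi-start search. This is a hedged illustration rather than the software's algorithm: plain gradient descent stands in for the conjugate-gradient function, the toy objective is invented for the example, and all names (`refine`, `best_start`, `loss`, `grad`) are hypothetical.

```python
import random


def refine(weights, grad, n_iterations, step=0.05):
    """A few gradient-descent steps (a stand-in for conjugate gradient)."""
    w = list(weights)
    for _ in range(n_iterations):
        g = grad(w)
        w = [wi - step * gi for wi, gi in zip(w, g)]
    return w


def best_start(loss, grad, n_weights, n_starts, n_iterations, seed=1):
    """Refine several random starting points briefly and keep the best."""
    rng = random.Random(seed)
    best_w, best_loss = None, float("inf")
    for _ in range(n_starts):
        w0 = [rng.uniform(-1.0, 1.0) for _ in range(n_weights)]
        w = refine(w0, grad, n_iterations)
        value = loss(w)
        if value < best_loss:
            best_w, best_loss = w, value
    return best_w, best_loss


# Toy objective with two local minima, used only for this illustration.
def loss(w):
    return (w[0] ** 2 - 1.0) ** 2 + 0.3 * w[0]


def grad(w):
    return [4.0 * w[0] * (w[0] ** 2 - 1.0) + 0.3]


start, value = best_start(loss, grad, n_weights=1, n_starts=20, n_iterations=5)
print(start, value)
```

The weights returned by `best_start` would then be passed to the main optimization loop. Both settings trade fitting time for a better chance of starting near a good minimum.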

Gain

The value of the gain, g, to be used in the activation functions in the hidden and output layers. The value must be positive.

Maximum number of iterations

This sets a limit on the number of iterations the conjugate-gradient function can use in the estimation of the neural network. This value must be a positive integer.

Validation for early stopping

To improve the accuracy of the neural network approximations for new data records, it is usually desirable to stop the optimization before the value of the objective function reaches a global minimum on the training set. This method, known as early stopping, can be performed by using a validation set of data records, specified in the Validation dataset fields on the menu. The optimization is then halted when the sum of squares error function achieves a minimum over the validation set, whose data records have not been used to estimate the values of the free parameters in the model. The options below control validation for early stopping.

| Number of iterations of the initial optimization | The number of iterations of the optimizing function to complete before beginning validation |

| Number of iterations between validations | The number of iterations between consecutive validations |

| Number of iterations after validation | The number of iterations to continue validating beyond the current minimum of the objective function before stopping |
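One way to read the three validation options is as the controls of the loop sketched below. This is an interpretation made for illustration, not the software's implementation: `step` and `validation_error` are hypothetical callables supplied by the caller, and the exact rule for when validation starts and stops is an assumption.

```python
def fit_with_early_stopping(step, validation_error, weights,
                            max_iterations, n_initial, n_between, n_after):
    """Run an optimizer with early stopping on a validation set.

    step(weights)             -> weights after one optimizer iteration
    validation_error(weights) -> error on the validation records
    n_initial: iterations to complete before the first validation
    n_between: iterations between consecutive validations
    n_after:   iterations to continue past the best validation so far
    """
    best_weights, best_error, best_iteration = weights, float("inf"), 0
    for iteration in range(1, max_iterations + 1):
        weights = step(weights)
        if iteration < n_initial:
            continue  # still in the initial optimization
        if (iteration - n_initial) % n_between != 0:
            continue  # not a validation iteration
        error = validation_error(weights)
        if error < best_error:
            best_weights, best_error, best_iteration = weights, error, iteration
        elif iteration - best_iteration >= n_after:
            break  # no improvement for n_after iterations
    return best_weights, best_error, best_iteration


# Toy example: the "weights" are a single integer that training keeps
# increasing, while the validation error is smallest at 6.
result = fit_with_early_stopping(
    step=lambda w: w + 1,
    validation_error=lambda w: (w - 6) ** 2,
    weights=0,
    max_iterations=100,
    n_initial=2,
    n_between=2,
    n_after=4,
)
print(result)
```

In this sketch the weights returned are those from the iteration with the smallest validation error, not those from the iteration at which the loop stopped.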

Randomization seed

This gives a seed to initialize the random number generation used for bootstrapping and cross-validation. Using zero initializes this from the computer’s clock, but specifying a nonzero value gives a repeatable analysis.
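The seed convention can be mimicked in Python as follows. This is only an analogy: the helper name `make_generator` is hypothetical, and Python draws its default seed from the operating system's entropy source (or the clock if that is unavailable) rather than strictly from the clock.

```python
import random


def make_generator(seed):
    """Return a random number generator following the zero/nonzero rule.

    A seed of zero asks Python to pick its own starting state, so runs
    differ; any nonzero seed gives the same sequence on every run.
    """
    return random.Random(None if seed == 0 else seed)


# Two generators built from the same nonzero seed agree exactly.
first = make_generator(12345)
second = make_generator(12345)
print(first.random() == second.random())
```

Recording the nonzero seed alongside the results is what allows an analysis to be repeated later.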

See also

- Neural Network

- Save Options for choosing which results to save

- NNFIT directive